Every system transforms data into output, which is why it is important to understand the efficiency of the algorithms and data structures behind each solution.

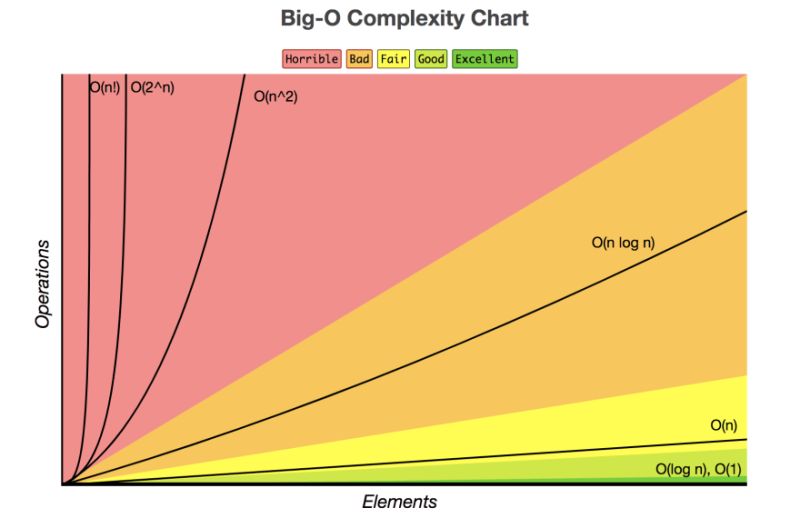

Big O notation describes the efficiency of an algorithm in terms of its time and space complexity. The input size of a function can grow, and we should be aware of what is going to happen to the system in the worst-case scenario. Time complexity is denoted O(…), where the three dots represent some function. Usually, the variable n denotes the input size.
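To make the worst-case idea concrete, here is a minimal sketch that counts the comparisons made by a simple left-to-right search. The function name `linear_search_steps` and the sample sizes are illustrative assumptions, not part of any library.

```python
def linear_search_steps(items, target):
    """Scan left to right; return (index, comparisons), with index -1 if absent."""
    comparisons = 0
    for index, value in enumerate(items):
        comparisons += 1
        if value == target:
            return index, comparisons
    return -1, comparisons


for n in (10, 100, 1000):
    data = list(range(n))
    # Worst case: the target is absent, so every element is inspected.
    _, steps = linear_search_steps(data, -1)
    print(n, steps)  # the comparison count grows in step with n
```

When the input is ten times larger, the worst case does ten times more work, which is what O(n) expresses.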

The most common runtime complexities are:

- O(1) The running time of a constant-time algorithm does not depend on the input size.

- O(log n) A logarithmic algorithm often halves the input size at each step. The running time of such an algorithm is logarithmic because log2 n equals the number of times n must be divided by 2 to get 1.

- O(n) A linear algorithm goes through the input a constant number of times.

- O(n log n) This time complexity often indicates that the algorithm sorts the input because the time complexity of efficient sorting algorithms is O(n log n). Another possibility is that the algorithm uses a data structure where each operation takes O(log n) time.

- O(n^2) A quadratic algorithm often contains two nested loops.

- O(n^3) A cubic algorithm often contains three nested loops.

- O(2^n) This time complexity often indicates that the algorithm iterates through all subsets of the input elements. For example, the subsets of {1, 2, 3} are ∅, {1}, {2}, {3}, {1, 2}, {1, 3}, {2, 3}, and {1, 2, 3}.

- O(n!) This time complexity often indicates that the algorithm iterates through all permutations of the input elements. For example, the permutations of {1, 2, 3} are (1,2,3), (1,3,2), (2,1,3), (2,3,1), (3,1,2), and (3,2,1).
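The classes above can be sketched with one small Python function each. These are illustrative examples with made-up function names, not canonical implementations. The comments give the usual cost in terms of the input length n.

```python
from itertools import combinations, permutations


def first_item(items):
    # O(1): one index lookup, regardless of the list length.
    return items[0]


def binary_search(sorted_items, target):
    # O(log n): the search range is halved on every iteration.
    lo, hi = 0, len(sorted_items) - 1
    while lo <= hi:
        mid = (lo + hi) // 2
        if sorted_items[mid] == target:
            return mid
        if sorted_items[mid] < target:
            lo = mid + 1
        else:
            hi = mid - 1
    return -1


def total(items):
    # O(n): a single pass over the input.
    result = 0
    for value in items:
        result += value
    return result


def has_duplicate(items):
    # O(n log n): sorting dominates; the adjacent-pair scan is O(n).
    ordered = sorted(items)
    return any(a == b for a, b in zip(ordered, ordered[1:]))


def count_pairs_with_sum(items, target):
    # O(n^2): two nested loops over the input.
    count = 0
    for i in range(len(items)):
        for j in range(i + 1, len(items)):
            if items[i] + items[j] == target:
                count += 1
    return count


def all_subsets(items):
    # Enumerates all 2^n subsets of the input.
    return [subset
            for size in range(len(items) + 1)
            for subset in combinations(items, size)]


def all_permutations(items):
    # Enumerates all n! orderings of the input.
    return list(permutations(items))


print(len(all_subsets([1, 2, 3])))       # 8 subsets
print(len(all_permutations([1, 2, 3])))  # 6 permutations
```

A cubic example would simply add a third nested loop to `count_pairs_with_sum`, for instance to count triples instead of pairs.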

Why should we care about Big O notation? For large applications that manipulate large amounts of data, this analysis is really important, since inefficient algorithms will degrade the performance of the system. Be aware of the effect of the data structures you use in an algorithm, since each one stores data in memory and performs operations in a different way.
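As a rough illustration of how the choice of data structure changes the cost of the same operation, the sketch below times a membership test on a Python `list` (a linear scan) and on a `set` (a hash lookup, constant time on average). The size `n` and the repetition count are arbitrary assumptions, and the exact timings will vary by machine.

```python
import timeit

n = 100_000
as_list = list(range(n))
as_set = set(as_list)
missing = -1  # an absent value forces the list to scan every element

# Run each membership test 100 times and compare the total elapsed time.
list_time = timeit.timeit(lambda: missing in as_list, number=100)
set_time = timeit.timeit(lambda: missing in as_set, number=100)

print(f"list: {list_time:.4f}s  set: {set_time:.4f}s")
```

Both containers hold the same values and give the same answer, but the list has to inspect every element while the set does not.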

NP-hard problems are an important class of problems for which no polynomial-time algorithm is known.