Ecology matters to me, and the danger we face is real. Raising public awareness of that danger is essential, and fighting for a solution is the least we can do. A few things need to be spelled out, though.

It is easy to take to the streets and shout about what we don't like: no nuclear, no drilling, no global warming, no fusion experiments, no GMOs, no plants that spoil the landscape, no particulate matter, no traffic, no TAV, no motorways, no electric cars because the batteries pollute and the energy used to build them generated pollution (and what do diesel and petrol cars do, exactly?), no photovoltaic panels because in 20 years they will need to be disposed of, long live nature! Let the powerful fix global warming, for God's sake, there is only one planet and this is no joke.

It is easy to make collective appeals; but when it comes to giving something up ourselves, to reasoning from the individual's point of view, that is when all the problems surface.

Our life runs on energy, which does not appear by divine grace; I am not talking about spirituality, but about hard balance sheets in joules and a handful (more or less) of physical formulas that hold the universe together.

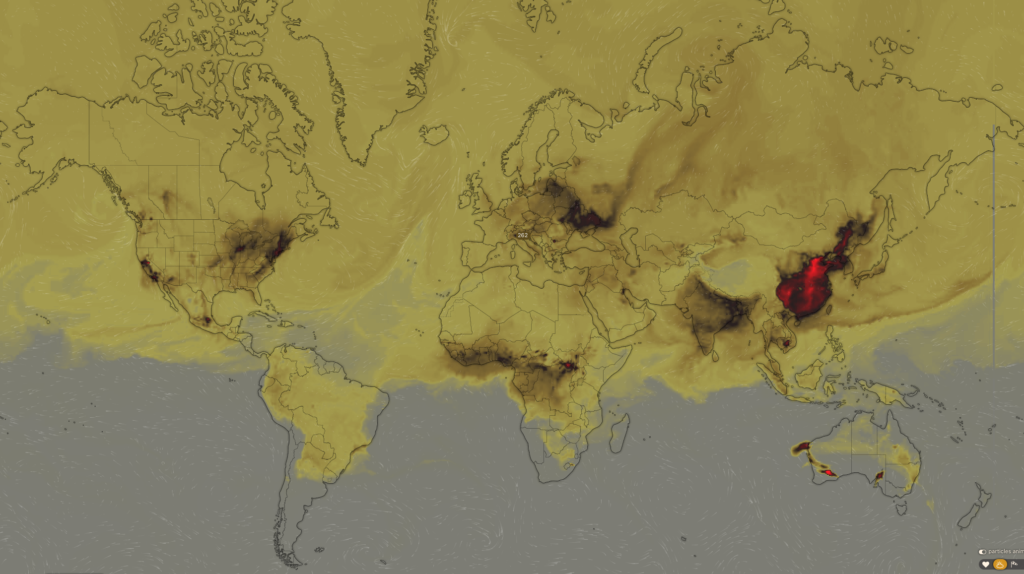

The food we put on the table consumes resources, and yes, that includes vegan food: after all, it does not get to the supermarket on its own. Our travel consumes resources (and time! Never forget to count time as a resource). The electronic devices we depend on consume resources: think of how much you load the servers when you send around memes of Messi looking bewildered or browse influencers' profiles; that is energy being consumed every time. Our home, always warm, or always cool, or always lit, constantly demands resources. This consumption, this prosperity and economic growth, has damaged our planet and continues to do so every single second, day after day, month after month, year after year.

Do you hope to meet these needs overnight with clean energy alone, quickly enough to avert disaster? It will not happen. It is brutal, but that is how it is. It is not technically possible; it is utopian. Our environmental crisis will be solved only through compromises by everyone; complying with the Paris Agreement will not be enough. Have no illusions, there are no magic wands; you can protest as much as you like, but if you truly believe in what you are doing, get ready to change.

Believe me: when we democratically decided to give up nuclear power, none of us would have given up the lights at home. (I consider the decision to give up nuclear power the product of collective madness and of the inability of those who govern us to make an important decision without looking merely to electoral consensus.)

When you say you want to stop global warming, do you think you can at the same time give up your meat, your exotic fruit, your 4 cars per family, the heating that is always on, and the rest of your comforts?

Either we produce energy, or we will have no growth and prosperity and will go back to the Stone Age. Either we consume resources in proportion to what clean energy production can supply, or we will pollute. Either we stop polluting (or at least sharply limit it), or we will compromise the planet.

You decide where in this loop the compromise goes.

(Post rearranged by yours truly from a post found on the Internet whose source I do not remember, but which I thank immensely for the wisdom and simplicity with which it expressed these ideas [if you are reading this, make yourself known!]. Btw, no, it is not by Greta Thunberg.)
